US Tech Force: Why It Faces Major Challenges—and How It Can Succeed Anyway

Summary

- The US Tech Force is a new effort to recruit technical experts to accelerate AI adoption in the executive branch.

- Led by the Office of Personnel Management (OPM), it ambitiously aims to hire 1,000 fellows annually, starting this year.

- There are major benefits to this kind of program, but it will face steep challenges.

- It can use a special authority to hire quickly, but standard practices will likely cause delays, such as the notoriously slow security clearance process.

- While OPM will recruit fellows, executive branch agencies will need to hire, onboard, and fund them—potentially creating friction and inefficiencies.

- Key interventions can increase the odds of the Tech Force’s success.

- Agencies can use interim security clearances to avoid months-long delays.

- Congress could provide specific funding for agencies to hire fellows.

- OPM can use its coordinating role to match fellows to agencies, rather than letting them compete for top candidates.

- The executive branch can use non-competitive eligibility and personnel exchanges with the private sector to increase the government’s return on investment.

On December 15, OPM announced a new program called the US Tech Force. The Tech Force is billed as a “cross-government program to recruit top technologists to modernize the federal government.” It intends to take on “the most complex and large-scale civic and defense challenges of our era,” running the gamut from “administering critical financial infrastructure at the Treasury Department to advancing cutting-edge programs at the Department of Defense.”

Through the program, the government plans to hire approximately 1,000 fellows each year who are highly skilled in software engineering, AI, cybersecurity, data analytics, or technical project management, to serve for one- to two-year terms. The Tech Force aims to foster early-career talent in particular, a demographic that the federal government has long struggled to recruit in sufficient numbers. To support the program, the government is partnering with private-sector companies, which will provide technical training and recruitment.

The sheer scale envisioned for the Tech Force makes it noteworthy. Compare it to the previous administration’s AI Talent Surge initiative, which hired around 250 people between October 2023 and October 2024. The U.S. Digital Corps, another similar program, had only 70 fellows in its most recent cohort in 2024. The timeline that OPM outlines for the Tech Force launch is also very ambitious: an initial pilot wave of fellows by Spring 2026, followed by the start of the first on-cycle cohort by September 2026.

While the Tech Force has huge potential, it will need to overcome the challenges inherent to rapid, large-scale talent acquisition in the federal government—and make sure that the government recoups the value of this significant investment.

Using a streamlined process and private partnership to meet the ambitious goals

Per a memo from OPM to agency heads sent the same day as the Tech Force announcement, Tech Force fellows will be hired as “Schedule A” federal employees. Schedule A is an “excepted-service” hiring authority. That means that fellows can be hired using streamlined procedures that generally shorten the hiring timeline, which otherwise runs about 100 days for the default “competitive-service” hiring used to fill most rank-and-file government positions. The difference is significant given that the Tech Force application only just closed on February 2, and at least one report says that the program is targeting start dates in March.

Notwithstanding the expedited Schedule A hiring process, OPM Director Scott Kupor has said that Tech Force fellows will still go through the normal channels for obtaining security clearances, though he noted that agencies have assured him that they will process fellows’ clearances—which typically take months or more—as efficiently as possible.

The Tech Force also involves public-private collaboration. So far, roughly thirty companies have agreed to partner with the government to support the program, including Amazon Web Services, Apple, Google Public Sector, Meta, Microsoft, Nvidia, OpenAI, Oracle, Palantir, and xAI. Per the Tech Force website, these companies can provide support in various ways, such as offering technical training resources and mentorship, nominating employees for participation in the program, and committing to considering Tech Force alums for employment after their government service has ended.

While it’s not apparent what form “technical training resources” will take, they could be quite valuable, coming from companies at the bleeding edge of AI and other critical technology. The government might therefore consider working with companies to extend such resources to other technical employees within the federal government, beyond just the Tech Force teams. This could help diffuse the knowledge and experience gains of the Tech Force program to more federal employees, magnifying the program’s overall impact.

OPM’s complicated role leading the initiative

OPM appears to have primary responsibility for the Tech Force, at least in terms of overall program administration and coordination. The Office of Management and Budget (OMB) and the General Services Administration (GSA)—which, like OPM, focus on governmentwide operations and resources—are also listed in OPM’s announcement as key players. The Tech Force’s website emphasizes that the program has the White House’s backing, with the OPM announcement specifying the involvement of the Office of Science and Technology Policy (OSTP). OSTP has had a prominent role in shaping the Administration’s AI policy, most notably the AI Action Plan released last July.

In the memo sent to agency heads, OPM explained that it will provide centralized oversight and administration for the program, including managing outreach, recruitment, and assessment of the fellows. However, individual agencies will be responsible for hiring, onboarding, and funding Tech Force fellows, with projects and assignments set by agency leadership. OPM also instructs that Tech Force teams will report directly to agency heads (or their designees).

Beyond such statements, OPM has yet to publicly outline how exactly its centralized recruitment and assessment of applicants will intersect with agencies’ responsibility for hiring fellows. Looking to other initiatives helps to show the different structures that are possible for these sorts of programs, as well as their advantages and disadvantages.

First, there’s the U.S. Digital Corps, similarly centered on short-term tech-focused appointments for early-career talent, though much smaller than the Tech Force. In that program, the GSA served a coordinating role by pairing candidates with partnering agencies, with input from both applicants and agencies to gauge preferences and fit. While participants were formally hired by GSA, they were “detailed,” i.e., sent on assignment, to their partnering agencies for the duration of the fellowship. Contrast that with the much larger Presidential Management Fellows (PMF) program, focused on early-career talent across a variety of disciplines. There, OPM provided centralized vetting and selected a slate of finalists, though agencies ultimately decided which finalists, if any, to interview and hire. For context, OPM selected 825 finalists for the PMF class of 2024.

Based on the OPM memo and other materials, the Tech Force seems closer in its intended structure and scale to the PMF program than the U.S. Digital Corps. But if that’s indeed the case, it’s worth noting some potential pitfalls of that model of which Tech Force leadership should remain aware. Notably, in the ten years before it was discontinued in 2025, on average 50% of PMF finalists did not obtain federal positions. Among other things, the fact that agencies had to pay an $8,000 premium to OPM for every PMF finalist they hired seems to have functioned as a disincentive, and the uncertainty caused by agencies’ long hiring timelines may have prompted finalists to pursue other career opportunities.

As the Federation of American Scientists suggested in the context of potential PMF reform, OPM should focus on creating a strong support ecosystem for the Tech Force to counteract these issues, including by strengthening key partnerships in agencies. Specifically, establishing dedicated and high-ranking “Tech Force Director” positions within agencies could foster a closer fit between agencies’ needs and the benefits the program can offer while continuing to share the administrative load of the program more broadly.

The goal: accelerating the government’s adoption of AI and attracting early-career talent

According to OPM, the purposes of the Tech Force are numerous. First and foremost, it aims to accelerate the government’s adoption of AI and other emerging technologies, including by deploying teams of technologists to various agencies to work on high-impact projects. That strong focus on AI is consistent with the involvement of OSTP, which has led on AI policy.

Judging from its website and other publicly available materials, the Tech Force appears to be focused to a considerable degree on modernizing the federal government’s aging digital systems, perhaps more so than the work on evaluating the capabilities and risks of frontier AI models that offices like the Commerce Department’s Center for AI Standards and Innovation (CAISI) do. Among the types of projects participants will work on, the Tech Force lists AI implementation, application development, data modernization, and digital service delivery.

Even though AI evaluation and monitoring work isn’t explicitly on the list, it fits well within the Tech Force’s large anticipated scale and its broad aim to build “the future of American government technology.” Such work improves the security of AI systems, both for commercial uses and when deployed throughout the federal government. Moreover, expressly including AI evaluation and monitoring work within the scope of the program might bolster recruitment of top talent given its intersection with high-profile national security work—interesting and valuable experience that tech experts typically can’t get outside of the government.

On the subject of recruitment, publicly available materials like the Tech Force website and OPM memo convey a focus on early-career talent, to “[h]elp address the early-stage career gaps in government.” As OPM Director Kupor noted on the heels of the Tech Force announcement, the federal government has long trailed the private sector in attracting and hiring junior talent, with only 7% of the federal workforce under the age of thirty. Kupor frames the Tech Force as part of OPM’s broader effort to “Make Government Cool Again,” infusing it with “newer ideas and newer experiences” to keep pace with rapid technological change.

The Tech Force also plans to employ “experienced engineering managers.” Those managers will lead and mentor teams composed largely of early-career talent. While the Tech Force will primarily seek early-career talent via traditional recruiting channels, it appears that managers will be drawn mostly or perhaps even exclusively from the program’s partnering companies. OPM thus notes that the program will serve the purpose of providing mid-career technology specialists with an opportunity to gain government experience without necessitating a permanent transition.

Two major challenges for the Tech Force—and how to tackle them

1. Getting the Tech Force set up quickly

OPM is aiming for an initial pilot wave of fellows by the spring (with one report specifying that it’s targeting start dates by March), and for the first full cohort of 1,000 fellows by September. That schedule is possible, though it means that agencies will have to significantly improve upon the government’s average hiring and clearance timelines, which generally take several months, if not longer. There are several ways to do that.

For context, the special “Schedule A” authority that will be used to hire Tech Force fellows can theoretically be deployed very quickly, because it doesn’t require time-consuming procedures like rating and ranking of applicants that regular hiring entails. Though OPM plans to have applicants undergo a technical assessment and potentially interviews with agency leadership, those steps might conceivably add only a few weeks, or even less time if well-staffed.

As for security clearances, there’s similarly no legal barrier to them moving quickly—for example, they have sometimes been issued in a matter of weeks or even days for political appointees, such as those needed for crisis-response efforts and other urgent matters. However, the clearance timeline for the average new hire runs anywhere from two to six months or more, depending on the level of clearance needed and whether the case presents any complications, like foreign business ties, necessitating further investigation.

OPM Director Kupor has said that agencies have promised to process Tech Force fellows’ security clearances as quickly as possible. Indeed, timelines for clearances can shrink from months to days when personnel know that a particular matter is a top priority for the head of their agency or the White House, as documented by Raj Shah and Christopher Kirchhoff in their 2024 book Unit X, about the Pentagon’s elite Defense Innovation Unit.

To this end, agencies could make use of “interim” security clearances for fellows, which would allow them to begin work pending a final clearance decision in cases that don’t raise concerns upon initial review. Interim clearances can shave months off the timeline, yet agencies appear to use them unevenly, perhaps overestimating the risk of a negative final clearance decision. But if agencies are to meet the Tech Force’s ambitious goals (especially for a pilot wave of fellows as early as March), then they need to consider utilizing this tool—and personnel within the organization need to know that they have cover from their leadership in using it.

Still, other operational challenges and questions loom. While pressure from the top can accelerate hiring and clearance timelines, the agency teams tasked with fulfilling such mandates may find it difficult to maintain the pace over longer periods if they’re inadequately resourced and staffed. Furthermore, OPM has made it clear that agencies will fund Tech Force fellows and projects themselves, leaving the overall financial footing of the program unclear, and potentially delaying its actual launch at any agencies that might struggle to find available funds on relatively short notice.

Congress could bolster the Tech Force by appropriating funds to specifically support it, to include project budgets, fellows’ salaries, and the other costs associated with hiring and clearing fellows rapidly and at scale. Without dedicated appropriations, agencies may vary widely in the amount of discretionary funding that they’re able or willing to devote to the program, especially at the outset of this new and previously unaccounted-for expenditure. Congress could also pass measures aimed at increasing the ability of federal hiring teams to assess AI talent, like those in the bipartisan “AI Talent” bill introduced on December 10. Building up this type of AI-enabling talent would help agencies work efficiently in selecting and hiring the right technical expertise, for both the Tech Force and other similar hiring efforts.

Additionally, given OPM’s statements that hiring of Tech Force fellows will be conducted directly by agencies, it remains to be seen how exactly OPM will ensure that agencies don’t waste resources—including valuable time—competing over the same candidates. Within the private sector, competition for AI talent is fierce, and that dynamic seems likely to affect the Tech Force as well. The Tech Force job vacancies posted so far note that they’re “shared announcements” from which various agencies may hire, and suggest that it’ll be up to the agencies to decide which candidates to interview and make offers to. OPM should play a robust coordinating role here so that smaller or less well-known agencies aren’t disadvantaged in attracting and securing sufficient qualified hires. Based on the scale of the program, OPM might even consider a model like the “medical match” system used to pair medical students with residency programs, to help both applicants and agencies weigh their options and needs in a more efficient and organized manner.
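To illustrate what a match-style system could look like in practice, the sketch below implements applicant-proposing deferred acceptance, the family of algorithms on which the medical residency match is based. It is a minimal illustration under stated assumptions, not a description of any process OPM has announced: the function name `match_fellows`, the fellow and agency names, the preference lists, and the capacities are all hypothetical.

```python
from collections import deque


def match_fellows(fellow_prefs, agency_prefs, capacity):
    """Applicant-proposing deferred acceptance with agency capacities.

    fellow_prefs: fellow -> list of agencies, most preferred first (hypothetical data)
    agency_prefs: agency -> list of fellows, most preferred first (hypothetical data)
    capacity:     agency -> number of fellowship slots
    Returns agency -> list of matched fellows, in the agency's preference order.
    """
    # rank[agency][fellow] is the fellow's position on that agency's list (lower is better).
    rank = {a: {f: i for i, f in enumerate(prefs)} for a, prefs in agency_prefs.items()}
    next_choice = {f: 0 for f in fellow_prefs}  # index of the next agency each fellow will try
    held = {a: [] for a in agency_prefs}        # tentative matches, revised as proposals arrive
    free = deque(fellow_prefs)

    while free:
        fellow = free.popleft()
        prefs = fellow_prefs[fellow]
        if next_choice[fellow] >= len(prefs):
            continue  # list exhausted: this fellow stays unmatched
        agency = prefs[next_choice[fellow]]
        next_choice[fellow] += 1

        if fellow not in rank.get(agency, {}):
            free.append(fellow)  # agency did not rank this fellow; try the next choice
            continue

        # Tentatively hold the proposal, then release the least-preferred
        # fellow if the agency is now over capacity.
        held[agency].append(fellow)
        held[agency].sort(key=rank[agency].__getitem__)
        if len(held[agency]) > capacity[agency]:
            free.append(held[agency].pop())

    return held


# Hypothetical example: one high-profile agency with a single slot
# and one smaller agency with two slots.
example = match_fellows(
    fellow_prefs={
        "ana": ["Treasury", "SmallAgency"],
        "ben": ["Treasury", "SmallAgency"],
        "cy": ["Treasury", "SmallAgency"],
    },
    agency_prefs={
        "Treasury": ["ben", "ana", "cy"],
        "SmallAgency": ["ana", "cy", "ben"],
    },
    capacity={"Treasury": 1, "SmallAgency": 2},
)
print(example)
```

In this toy run every fellow lists the better-known agency first, yet the smaller agency still fills both of its slots with candidates it ranked highly, because offers are resolved centrally rather than through parallel bidding. A real system would also need to handle details this sketch ignores, such as clearance requirements, location constraints, and ties.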

Finally, the public-private structure of the program could present some challenges. As full-time federal employees, Tech Force fellows will be subject to the generally applicable conflict of interest rules, which prohibit government employees from receiving outside compensation, or from accessing information or taking action that could unduly benefit themselves or closely related parties financially. Given such rules, the Tech Force website notes that participants nominated by partner companies are expected to take unpaid leave or to separate from their private-sector employers while working for the government. Even so, conflict of interest rules can complicate federal service for tech-company employees, who often have deferred compensation packages (e.g., restricted stock units, options) that vest over time, perhaps several years in the future.

The Tech Force website says that it “expect[s]” that fellows, including those nominated for the program by partnering companies, will be able to retain any deferred compensation packages, though it mentions that companies will need to review details on a case-by-case basis to determine whether any such compensation must be suspended while an individual remains in the program. It bears noting that agencies’ ethics offices may also have to review potential conflicts on a case-by-case basis as they arise, with an eye to the details of the particular financial interest and government matter at issue. Because of the fact-specific nature of that analysis, it’s hard to generalize the result, and the answers could morph over time as projects and financial situations change during the course of government service.

Without greater clarity on whether and how the government can consistently address the challenges raised by conflict of interest rules, the Tech Force may struggle to recruit and retain some promising candidates. This issue is perhaps most significant for the senior engineering managers (with the most compensation on the line) that the program plans to draw from private partners.

2. Ensuring the Tech Force provides long-term benefits for the government

As OPM Director Kupor acknowledges, the federal government has a problem with its early-career talent pipeline, particularly as it relates to the need for greater adoption of AI and other emerging technology. He has cast the Tech Force as part of the solution, a way to infuse the government with a new crop of tech-savvy employees who will lend their expertise to projects of national scope and, in the process, discover that federal service can indeed provide interesting and valuable experience. But it’s unclear at this point how the Tech Force, with service terms of only one to two years, will translate to enduring change in the makeup of the federal workforce, or raise the overall level of technological uptake across government. To do so, program leadership could pursue two additional steps.

First, the White House could consider issuing an executive order granting “non-competitive eligibility” (NCE) to Tech Force fellows who successfully complete the program. NCE status allows individuals to be hired for competitive-service positions (which comprise the majority of federal civilian hiring) without having to compete against applicants from the general public. Thus, an individual with NCE status can be hired much more quickly than would otherwise be the case, assuming of course that they meet the qualifications of the position. For any Tech Force fellows interested in continuing their government service after they complete the program, NCE status would likely significantly streamline the process of obtaining another federal position, and ensure that the government doesn’t lose proven talent that’s eager to stay on. The Tech Force website in fact recognizes that some fellows may apply for continued federal service following the end of the program, and so granting NCE status to successful participants is in sync with its goals.

NCE is commonly granted to the alumni of federal programs like the Peace Corps, Fulbright Scholarship, and AmeriCorps VISTA, making it a natural fit for the Tech Force, not least because of its emphasis on early-career talent. NCE is typically valid for between one and three years following successful service completion, though the timeframe specified is entirely up to the White House’s discretion. Establishing a three-year NCE period for Tech Force alumni (versus one or two years) would give individuals the option of pursuing meaningful professional experiences outside of government before potentially returning for another stint. Likewise, it would give the government a broader window in which it might recoup its investment in training previous Tech Force talent.

Second, Tech Force leadership might consider expanding the program to include some opportunities for existing government employees to serve one- to two-year terms in the private sector. The Tech Force’s private-sector partners provide a potential ready-made source of such opportunities, and companies might be amenable given that they will lose some of their own employees and managers to the program for similar periods. For the federal government, making the Tech Force a two-way exchange (even in numbers much more modest than the Tech Force’s 1,000 fellows) would amplify the government’s access to knowledge and experience regarding AI and other emerging tech, beyond the Tech Force teams and projects themselves. That might be valuable insofar as the Tech Force teams could end up being quite insular given their direct reporting line to agency heads.

This sort of science-and-technology exchange program already has some precedent at federal agencies, and would increase diffusion of the Tech Force’s capacity-building benefits throughout the federal workforce. Upon their return to federal service, government employees might disseminate lessons and approaches from the private sector among their colleagues and teams. Furthermore, because the Tech Force’s team leaders will be drawn (perhaps exclusively) from private-sector partners, a two-way exchange could be a way to give existing government tech managers important experience, ultimately providing agencies with a deeper bench of mid- and senior-career talent.

AI Will Automate Compliance. How Can AI Policy Capitalize?

Disagreements about AI policy can seem intractable. For all of the novel policy questions that AI raises, there remains a familiar and fundamental (if contestable) question of how policymakers should balance innovation and risk mitigation. Proposals diverge sharply, ranging from, at one pole, pausing future AI development to, at the other, accelerating AI progress at virtually all costs.

Most proposals, of course, lie somewhere between, attempting to strike a reasonable balance between progress and regulation. And many policies are desirable or defensible from both perspectives. Yet, in many cases, the trade-off between innovation and risk reduction will persist. Even individuals with similar commitments to evidence-based, constitutionally sound regulations may find themselves on opposite sides of AI policy debates given the evolving and complex nature of AI development, diffusion, and adoption. Indeed, we, the authors, tend to locate ourselves on generally opposing sides of this debate, with one of us favoring significant regulatory interventions and the other preferring a more hands-off approach, at least for now.

However, the trade-off between innovation and regulation may not remain as stark as it currently seems. AI promises to enable the end-to-end automation of many tasks and reduce the costs of others. Compliance tasks will be no different. Professor Paul Ohm recognized as much in a recent essay. “If modest predictions of current and near-future capability come to pass,” he expects that “AI automation will drive the cost of regulatory compliance” to near zero. That’s because AI tools are well suited to regulatory compliance tasks. AI systems are already competent at many forms of legal work, and compliance-related tasks tend to be “on the simpler, more rote, less creative end of the spectrum of types of tasks that lawyers perform.”

Delegation of such tasks to AI may even further the underlying goals of regulators. As it stands, many information-forcing regulations fall short of expectations because regulated entities commonly submit inaccurate or outdated data. Relatedly, many agencies lack the resources necessary to hold delinquent parties accountable. In the context of AI regulations, AI tools may aid both in the development of and compliance with several kinds of policies, including but not limited to adoption of and ongoing adherence to cybersecurity safeguards, adherence to alignment techniques, evaluation of AI models based on safety-relevant benchmarks, and completion of various transparency reports.

Automated compliance is the future. But it’s more difficult to say when it will arrive, or how quickly compliance costs are likely to fall in the interim. This means that, for now, difficult trade-offs in AI policy remain: in some cases, premature or overly burdensome regulation could stifle desirable forms of AI innovation. This would not only be a significant cost in itself, but would also postpone the arrival of compliance-automating AI systems, potentially trapping us in the current regulation–innovation trade-off. How, then, should policymakers respond?

We tackle this question in our new working paper, Automated Compliance and the Regulation of AI. We sketch the contours of automated compliance and conclude by noting several of its policy implications. Among these are some positive-sum interventions intended to enable policymakers to capitalize on the compliance-automating potential of AI systems while simultaneously reducing the risk of premature regulation.

Automatable Compliance—And Not

Before discussing policy, however, we should be clear about the contours and limits of (our version of) automatable compliance. We start from the premise that AI will initially excel most at computer-based tasks. Fortunately, many regulatory compliance tasks fall in this category, especially in AI policy. Professor Ohm notes, for example, that many of the EU AI Act’s requirements are essentially information processing tasks, such as compiling information about the design, intended purpose, and data governance of regulated AI systems; analyzing and summarizing AI training data; and providing users with instructions on how to use the system. Frontier AI systems already excel at these sorts of textual reasoning and generation tasks. Proposed AI safety regulations or best practices might also require or encourage the following:

- Automated red-teaming, in which an AI model attempts to discover how another AI system might malfunction.

- Cybersecurity measures to prevent unauthorized access to frontier model weights.

- Implementation of automatable AI alignment techniques, such as Constitutional AI.

- Automated evaluations of AI systems on safety-relevant benchmarks.

- Automated interpretability, in which an AI system explains how another AI model makes decisions in human-comprehensible terms.

These, too, seem ripe for (at least partial) automation as AI progresses.

However, there are still plenty of computer-based compliance tasks that might resist significant automation. Human red-teaming, for example, is still a mainstay of AI safety best practices. Or regulation might simply impose a time-based requirement, such as waiting several months before distributing the weights of a frontier AI model. Advances in AI might not be able to significantly reduce the costs associated with these automation-resistant requirements.

Finally, it’s worth distinguishing between compliance costs—“the costs that are incurred by businesses . . . at whom regulation may be targeted in undertaking actions necessary to comply with the regulatory requirements”—and other costs that regulation might impose. While future AI systems might be able to automate away compliance costs, firms will still face opportunity costs if regulation requires them to reallocate resources away from their most productive use. While such costs will sometimes be justified by the benefits of regulation, these costs might also resist automation.

Notwithstanding these caveats, we do expect AI to eventually significantly reduce certain compliance costs. Indeed, a number of startups are already working to automate core compliance tasks, and compliance professionals already report significant benefits from AI. However, for now, compliance costs remain a persistent consideration in AI policy debates. Given this divergence between future expectations and present realities, how should policymakers respond? We now turn to this question.

Four Policy Implications of Automated Compliance

Automatability Triggers: Regulate Only When Compliance is Automatable

Recall the discursive trope with which we opened: even when parties agree that regulation will eventually be necessary, the question of when to regulate can remain a sticking point. The proregulatory side might be tempted to jump on the earliest opportunity to regulate, even if there is a significant risk of prematurity, if they assess the risks of belated regulation to be worse. The deregulatory side might respond that it’s better to maintain optionality for now. The proregulatory side, even if sympathetic to that argument, might nevertheless be reluctant to delay if they do not find the deregulatory side’s implicit promise to eventually regulate credible.

Currently, this impasse largely plays out through sheer factional politics that often force rival interests into supporting extreme policies: the proregulatory side attempts to regulate when it can, and the deregulatory side attempts to block it. Of course, factional politics is inherent to democracy. But a more constructive dynamic might also be possible. In our telling, both the proregulatory and deregulatory sides of the debate share some important common assumptions. They believe that AI progress will eventually unlock dramatic new capabilities, some of which will be risky and others of which will be beneficial. These common assumptions can be the basis for a productive trade. The trade goes like this: the proregulatory side agrees not to regulate yet, while the deregulatory side credibly commits to regulate once AI has progressed further.

How might the deregulatory side make such a credible commitment? Obviously, one way would be to enact legislation effective at a future date certain, possibly several years out. But picking the correct date would be difficult given the uncertainty of AI progress. The proregulatory side will worry that the date will end up being too late if AI progresses more quickly than predicted, while the deregulatory side will worry that it will end up being too early if AI progresses more slowly.

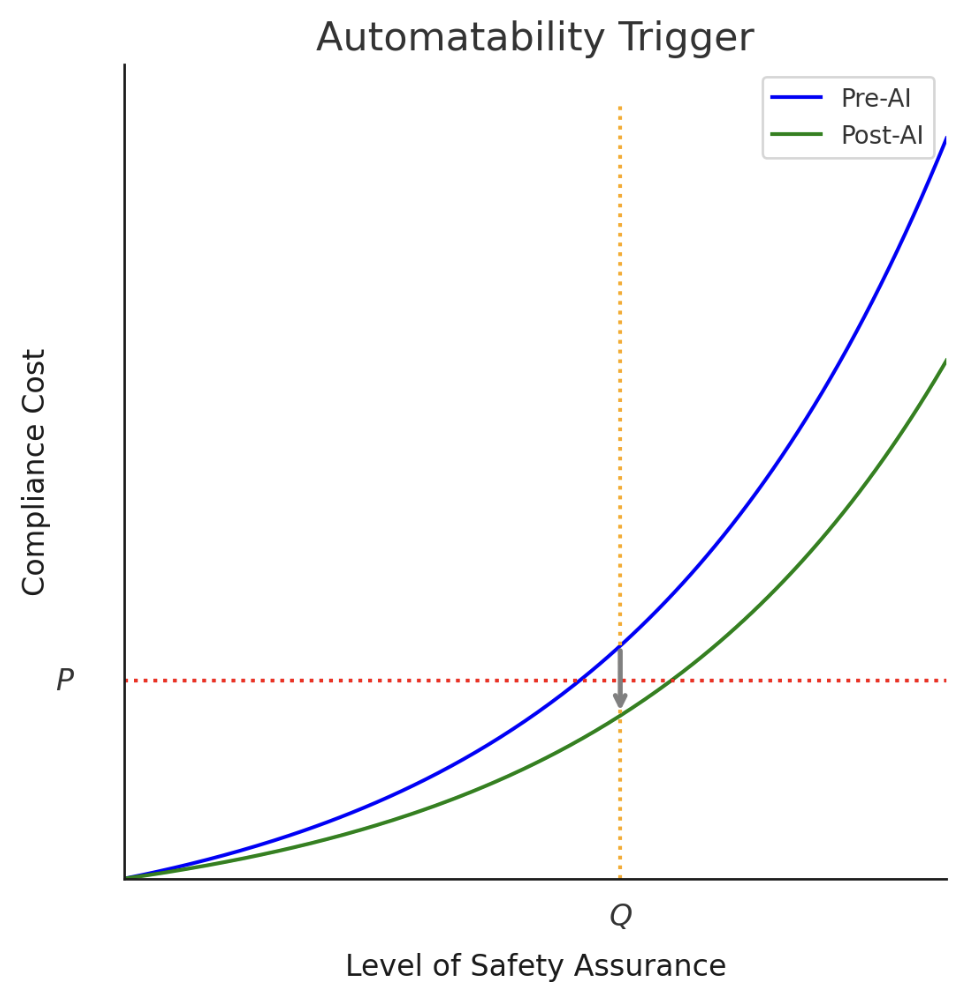

We propose another possible mechanism for triggering regulation: an automatability trigger. An automatability trigger would specify that AI safety regulation is effective only when AI progress has sufficiently reduced compliance costs associated with the regulation. Automatability triggers could take many forms, depending on the exact contents of the regulation that they affect. In our paper, we give the following example, designed to trigger a hypothetical regulation that would prevent the export of neural networks with certain risky capabilities:

The requirements of this Act will only come into effect [one month] after the date when the [Secretary of Commerce], in their reasonable discretion, determines that there exists an automated system that:

(a) can determine whether a neural network is covered by this Act;

(b) when determining whether a neural network is covered by this Act, has a false positive rate not exceeding [1%] and false negative rate not exceeding [1%];

(c) is generally available to all firms subject to this Act on fair, reasonable, and nondiscriminatory terms, with a price per model evaluation not exceeding [$10,000]; and,

(d) produces an easily interpretable summary of its analysis for additional human review.
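As an illustration only (this is not part of the model text), conditions (a) through (d) can be read as a simple conjunctive test. The sketch below is a minimal Python rendering under stated assumptions: the class `CandidateSystem`, its field names, and the function `trigger_satisfied` are hypothetical, and the thresholds are the bracketed placeholder values from the text. It also leaves out the Secretary's discretionary determination, which the model text makes the actual legal trigger.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class CandidateSystem:
    """Hypothetical description of a compliance-automating system."""
    determines_coverage: bool        # condition (a)
    false_positive_rate: float       # condition (b)
    false_negative_rate: float       # condition (b)
    available_on_frand_terms: bool   # condition (c)
    price_per_evaluation_usd: float  # condition (c)
    produces_readable_summary: bool  # condition (d)


# Bracketed placeholder values from the model text; a real statute could differ.
MAX_ERROR_RATE = 0.01
MAX_PRICE_USD = 10_000


def trigger_satisfied(system: CandidateSystem) -> bool:
    """Return True only if all four conditions hold at once."""
    return (
        system.determines_coverage
        and system.false_positive_rate <= MAX_ERROR_RATE
        and system.false_negative_rate <= MAX_ERROR_RATE
        and system.available_on_frand_terms
        and system.price_per_evaluation_usd <= MAX_PRICE_USD
        and system.produces_readable_summary
    )


# Hypothetical example: accurate enough, but too expensive per evaluation.
too_expensive = CandidateSystem(True, 0.005, 0.008, True, 25_000, True)
affordable = CandidateSystem(True, 0.005, 0.008, True, 9_500, True)
print(trigger_satisfied(too_expensive), trigger_satisfied(affordable))
```

Because the test is conjunctive, a system that is accurate but priced above the cap does not trigger the regulation, which is the property that ties the effective date to compliance cost.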

Our example is certainly deficient in certain respects. For instance, there is nothing in that text forcing the Secretary of Commerce to make such a determination (though such provisions could be added), and a highly deregulatory administration could likely thereby delay the date of such a determination well beyond the legislators’ intent. But we think that more carefully crafted automatability triggers could bring several benefits.

Most importantly, properly designed automatability triggers could effectively manage the risks of regulating both too soon and too late. They manage the risk of regulating too soon because they delay regulation until AI has already advanced significantly: an AI that can cheaply automate compliance with a regulation is presumably quite advanced. They manage the risk of regulating too late for a similar reason: AI systems that are not yet advanced enough to automate compliance likely pose less risk than those that are, at least for risks correlated with general-purpose capabilities.

There’s also the benefit of ensuring that the regulation does not impose disproportionately high costs on any one actor, thereby preventing regulation from forming an unintentional moat for larger firms. Our model trigger, for example, specifies that the regulation is only effective when the compliance determination from a compliance-automating AI costs no more than $10,000. Critically, these triggers may also be crafted in a way that facilitates iterative policymaking grounded in empirical evidence as to the risks and benefits posed by AI. This last benefit distinguishes automatability triggers from monetary or compute thresholds that are less sensitive to the risk profile of the models in question.

Automated Compliance as Evidence of Compliance

An automatability trigger specifies that a regulation becomes effective only when there exists an AI system that is capable of automating compliance with that regulation sufficiently accurately and cheaply. If such a “compliance-automating AI” system exists, we might also decide to treat firms that properly implement such a compliance-automating AI more favorably than firms that don’t. For example, regulators might treat proper implementation of compliance-automating AI systems as rebuttable evidence of substantive compliance. Or such firms might be subject to less frequent or stringent inspections.

Accelerate to Regulate

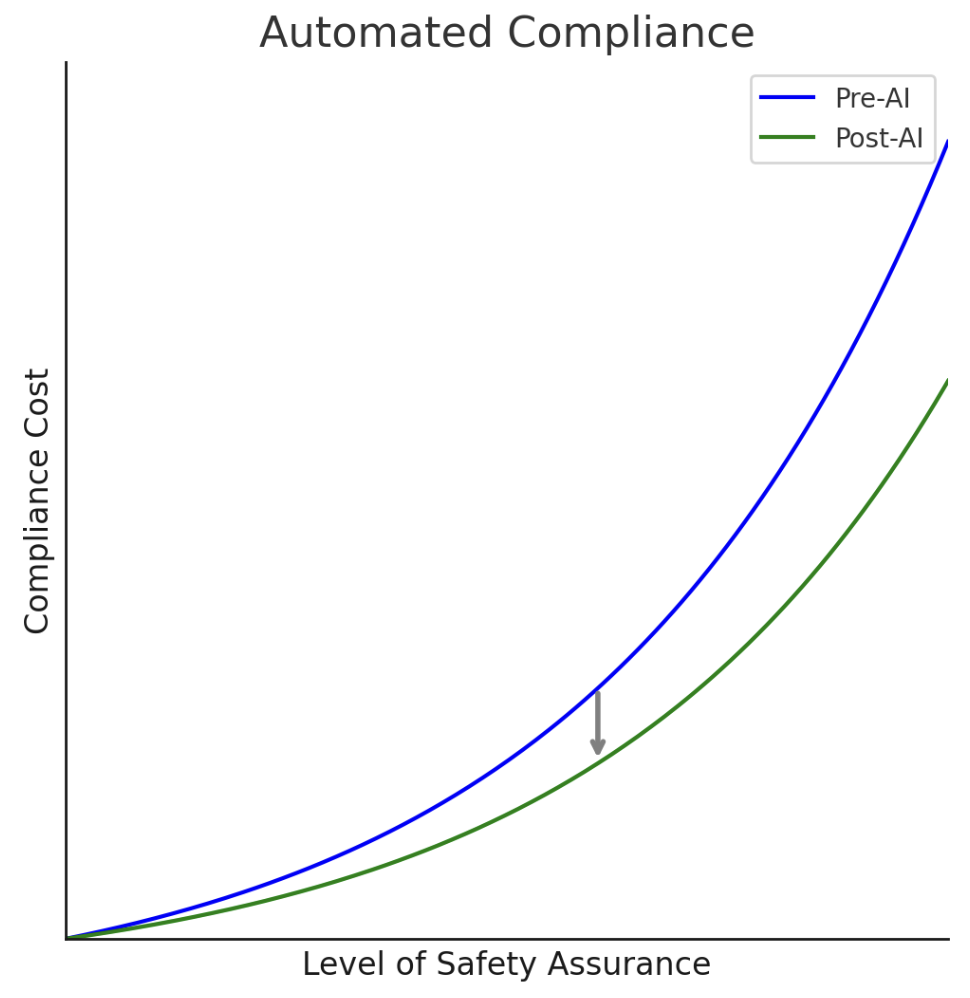

AI progress is not unidimensional. We have identified compliance automation as a particularly attractive dimension of AI progress: it reduces the cost to achieve a fixed amount of regulatory risk reduction (or, equivalently, it increases the amount of regulatory risk reduction feasible with a fixed compliance budget), thereby loosening one of the most consequential constraints on good policymaking in this high-consequence domain.

It may therefore be desirable to adopt policies and projects that specifically accelerate the development of compliance-automating AI. Policymakers, philanthropists, and civic technologists may be able to accelerate automated compliance by, for example:

- Building curated data sets that would be useful for creating compliance-automating AI systems;

- Building proof-of-concept compliance-automating AI systems for existing regulatory regimes;

- Instituting monetary incentives, such as advance market commitments, for compliance-automating AI applications;

- Ensuring that firms working on automated compliance have early access to restricted AI technologies; and

- Preferentially developing and advocating for AI policy proposals that are likely to be more automatable.

Automated Governance Amplifies Automated Compliance

Our paper focuses primarily on how private firms will soon be able to use AI systems to automate compliance with regulatory requirements to which they are subject. However, this is only one side of the dynamic: governments will be able to automate many of their core bureaucratic, administrative, and regulatory functions as well. To be sure, automation of core government functions must be undertaken carefully; one of us has recently dedicated a lengthy article to the subject. But, as with many things, the need for caution here should not be a justification for inaction or indolence. Governmental adoption of AI is becoming increasingly indispensable to state capacity in the 21st Century. We are therefore also excited about the likely synergies between automated compliance and automated governance. As each side of the regulatory tango adopts AI, new possibilities for more efficient and rapid interaction will open. Scholarship has only begun to scratch the surface of what this could look like, and what benefits and risks it will entail.

Conclusion: A Positive-Sum Vision for AI Policy

Spirited debates about the optimal content, timing, and enforcement of AI regulation will persist for the foreseeable future. That is all to the good.

At the same time, new technologies are typically positive-sum, enabling the same tasks to be completed more efficiently than before. Those of us who favor some eventual AI regulation should internalize this dynamic into our own policy thinking by carefully considering how AI progress will enable new modes of regulation that simultaneously increase regulatory effectiveness and reduce costs to regulated parties. This methodological lens is already common in technical AI safety, where many of the most promising proposals assume that future, more capable AI systems will be indispensable in aligning and securing other AI systems. In many cases, AI policy should rest on a similar assumption: AI technologies will be indispensable in regulatory formulation, administration, and compliance.

Hard questions still remain. There may be AI risks that emerge well before compliance-automating AI systems can reduce costs associated with regulation. In these cases, the familiar tension between innovation and regulation will persist to a significant extent. However, in other cases, we hope that it will be possible to design policies that ride the production possibilities frontier as AI pushes it outward, achieving greater risk reduction at declining cost.

Healthy Insurance Markets Will Be Critical for AI Governance

An insurance market for artificial intelligence (AI) risk is emerging. Major insurers are taking notice of AI risks, as mounting AI-related losses hit their balance sheets. Some are starting to exclude AI risks from policies, creating opportunities for others to fill these gaps. Alongside a few specialty insurers, the market is frothing with start-ups—such as the Artificial Intelligence Underwriting Company (for whom I work), Armilla AI, Testudo, and Vouch—competing to help insurers price AI risk and provide dedicated AI coverage.

How this fledgling insurance market matures will profoundly shape the safety, reliability and adoption of AI, as well as the AI industry’s resilience. Will insurance supply meet demand, protecting the industry from shocks while ensuring victims are compensated? Will insurers enable AI adoption by filling the trust gap, or will third-party verification devolve into box-ticking exercises? Will insurers reduce harm by identifying and spreading best practices, or will they merely shield their policyholders from liability with legal maneuvering?

In a recent Lawfare article, Daniel Schwarcz and Josephine Wolff made the case for pessimism, arguing that “liability insurers are unlikely to price coverage for AI safety risks in ways that encourage firms to reduce those risks.”

Here I provide the counterpoint. I make the case, not for blind optimism, but for engagement and intervention. Synthesizing a large swathe of theoretical and empirical work on insurance, my new paper finds considerable room for insurers to reduce harm and improve risk management in AI. However, realizing this potential will require many pieces to come together. On this point, I agree with skeptics like Schwarcz and Wolff.

Before getting into the challenges and solutions though, it’s important to grasp some of the basic dynamics of insurance.

Insurance as Private Governance

Insurers are fundamentally in the business of accurately pricing and spreading risk, but not only that: They also manage that risk by monitoring policyholders, identifying cost-effective risk mitigations, and enforcing private safety standards. Indeed, insurers have often played a key role in the safe assimilation of new technologies. For example, when Philadelphia grew tenfold in the 1700s, multiplying the cost of fires, fire insurers incentivized brick construction, spread fire-prevention practices, and improved firefighter equipment. When electricity created new hazards, property insurers funded the development of standards and certifications for electrical equipment. When automobile demand surged after World War II, insurers funded the development of crashworthiness ratings and lobbied for airbag mandates, contributing to the 90 percent drop in deaths per mile over the 20th century.

Insurers play the role of private regulator not out of benevolence, but because of simple market incentives. There are four key dynamics to understand.

First, insurers want to make premiums more affordable in order to expand their customer base and seize market share. Generally, reducing risk is the most direct way to reduce premiums.

Second, insurers want to control their losses. Once insurers issue policies, they directly benefit from any further risk reductions. Encouraging policyholders to take cost-effective mitigations and monitoring them to ensure they don’t take excessive risks directly protects insurers’ balance sheets. Examples of this from auto insurance include safety training programs and telematics. The longer-term investments insurers make in safety research and development (R&D), such as car headlight design, allow them to profit from predictable reductions in the sum and/or volatility of their losses. Insurance capacity (the amount of risk insurers can bear) is a scarce resource, ultimately limited by available capital: Highly volatile losses strain this capacity by requiring insurers to hold larger capital buffers.

Third, insurers want to be partners to enterprise. Risk management services (such as cybersecurity consulting) are often a key value proposition for large corporate policyholders, and they help insurers to differentiate themselves. Insurers can also enable companies to signal product quality and trustworthiness more efficiently, through warranties, safety certificates, and proofs of insurance. This is precisely what’s driving the boom in start-ups competing to provide insurance against AI risk: filling the large trust gap between (often young) vendors of cutting-edge AI technology and wary enterprise clients struggling to assess the risks of an unproven technology.

Fourth and finally, insurers seek “good risk.” Underwriting fundamentally involves identifying profitable clients while avoiding adverse selection (where insurers attract and misprice too many high-risk clients). This requires understanding the psychologies, cultures, and risk management practices of potential clients. For example, before accepting a new client, cyber insurance underwriters will make an extensive assessment of the client’s cybersecurity posture.

Insurers deploy various tools to achieve these aims: adherence to safety standards as a condition of coverage, risk-adjusted premiums rewarding safer practices, audits or direct monitoring of policyholders, and refusing to pay claims if the policyholder violated the terms of the contract (such as by acting with gross negligence or recklessness).

Are these tools effective, though? Does insurance uptake really reduce harm relative to a baseline where insurers are absent?

Moral Hazard vs. the Distorted Incentives of AI Firms

Skeptics of “regulation by insurance” point out that the default outcome of insurance uptake is moral hazard—that is, insureds taking excessive risk, knowing they are protected. From this angle, the efforts insurers make to regulate insureds are just a Band-Aid for a problem created by insurance.

These skeptics have a point: Moral hazard is a danger. Nevertheless, insurers can often improve risk management and reduce harm, despite moral hazard. My research finds this happens when the incentives for insureds to take care were already suboptimal: Insurance essentially acts as a corrective for many types of market failures.

Consider fire insurance again: Making a house fire-resistant protects not just that one house but also neighboring ones. However, individual homeowners don’t see these positive externalities: They are underincentivized to make such investments in fire safety. By contrast, the insurer that covers the entire neighborhood (or even just most of it) captures much more of the total benefit from these investments. It frequently happens that insurers are thus better placed to provide what are essentially public goods.

Are frontier AI companies such as OpenAI, Anthropic, or Google DeepMind sufficiently incentivized to take care? Common law liability makes a valiant attempt to do so, but as I and others point out, it is not up to the task for several reasons.

First, these companies are locked in a winner-take-most race for what could quickly become a hundred-billion- or multitrillion-dollar market, creating intense pressure to prioritize increasing AI capabilities over safety. This is especially true for start-ups that are burning capital at extraordinary rates while promising investors extremely aggressive revenue growth.

Second, safety R&D suffers from a classic public goods problem: Each company bears the full cost of such R&D, but competitors capture much of the benefit through spillovers. This leads to chronic underinvestment in a wide range of open research questions, despite calls from experts and nonprofits.

Third, the prospect of an AI Three Mile Island creates a free-rider problem. Nuclear’s promise of abundant energy died for a generation after accidents such as Three Mile Island and Chernobyl fueled public backlash and regulatory scrutiny. Similarly, if one AI company accidentally causes an AI Three Mile Island, the entire industry would suffer. But while all these firms benefit from others investing in safety, each prefers to free-ride.

Fourth, a large enough catastrophe or collapse in investor confidence will render AI companies “judgment-proof,” that is, insolvent and unable to pay the full amount of damages for which they are liable. Victims (and/or taxpayers) will be left to foot the bill, essentially subsidizing the risks these companies are taking.

Fifth is the lack of mature risk management in the frontier AI industry. A wealth of research finds that individuals and young organizations systematically neglect low-probability, high-consequence risks. This is compounded by the overconfidence, optimism, and “move fast and break things” culture typical of start-ups. Also likely at work is a winner’s curse: The AI company most willing to race ahead is likely the one that most underestimates the tail-risks.

Insurance uptake helps correct these misaligned incentives by involving seasoned stakeholders who don’t face the same competitive dynamics, are required by law to carry substantial capital reserves for tail-risks, and, again, are better placed to provide public goods.

Admittedly, history proves these beneficial outcomes are possible, not a given. There are still further challenges that skeptics rightly point to and which must be overcome if insurance is to be an effective form of private governance. I turn to these next.

Pricing Dynamic Risk

It is practically a truism to say AI risk is difficult to insure given the lack of data on incidents and losses. This is distracting and misleading. Distracting because trivially true. Every new risk has no historical loss data: That says nothing of how well or poorly insurers will eventually price and manage it. Misleading because compared to, say, commercial nuclear power risk when it first appeared, data is intrinsically easier to acquire for AI risks: Unlike with nuclear power plants, it’s possible to stress-test live AI systems quite cheaply (known as “red-teaming”). Other key data points, such as the cost of an intellectual property lawsuit or public relations scandal, are simply already known to insurers.

The dynamic nature of AI risk is the warranted concern. Because the underlying technology is evolving so rapidly, insurers could struggle to get a handle on it: Information asymmetries between insurers and their policyholders (especially if these last are AI developers) could remain large; lasting mitigation strategies will be difficult to identify; and the actuarial models that insurers traditionally rely on, which assume historical losses predict future ones, may not hold up.

This mirrors difficulties insurers faced with cyber risk, which stemmed from rapid technological evolution and intelligent adversaries adapting their strategies to thwart defenses. AI risk will include less of this adversarial element, at least where AI systems aren’t scheming against their creators.

Cyber insurers have recently started overcoming this information problem. Instead of relying solely on policyholders self-reporting their cybersecurity posture through lengthy, annual questionnaires, insurers now continuously scan policyholders’ vulnerabilities and security controls. This was enabled by so-called insurtech innovations and partnerships with major cloud service providers that already have access to much of the information on policyholders that insurers need. Insurers have also come to a consensus on mandating certain security controls, such as multi-factor authentication and endpoint detection, demonstrating that durable mitigations can be found.

For the AI insurance market to go well, insurers must learn the lessons of cyber. They must prepare from the start to use pricing and monitoring techniques, such as the aforementioned red-teaming, that are as adaptive as the technology they are insuring. They should also aim to simply raise the floor by mandating adherence to a robust safety and security standard before issuing a policy. Standardizing and sharing incident data will also be critical.

Even if insurers fail to price individual AI systems accurately, insurers can still help correct the distorted incentives of AI companies, as long as aggregate pricing is good enough. To illustrate: Pricing difficulties notwithstanding, aggregate loss ratios for cyber are well controlled, making it a profitable line of insurance. This speaks to the effectiveness of risk proxies such as company size, deployment scale, and economic sector. When premiums depend only on these factors, ignoring policyholders’ precautionary efforts, insurers lose a key tool for incentivizing good behavior. However, premiums will still track activity levels, a key determinant of how much risk is being taken. Excessive activity will be deterred by premium increases. Thus even with crude pricing, by drawing large potential future damages forward, insurers can help put brakes on the AI industry’s race to the bottom: The industry as a whole will be that much better incentivized to demonstrate their technology is safe enough to continue developing and deploying at scale.
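The point about crude pricing can be made concrete with a toy calculation. The sketch below is a minimal illustration under stated assumptions, not a description of how any insurer actually prices: the `Firm` class, the `crude_premiums` function, the single "activity" proxy, and all dollar figures are hypothetical. It spreads an aggregate expected loss across policyholders in proportion to activity alone, so precautions go unrewarded but scaling up activity still costs more.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Firm:
    name: str
    activity: float          # hypothetical proxy, e.g. deployment scale
    takes_precautions: bool  # invisible to the insurer under crude pricing


def crude_premiums(firms: list[Firm], aggregate_expected_loss: float) -> dict[str, float]:
    """Allocate the aggregate expected loss in proportion to activity only."""
    total_activity = sum(f.activity for f in firms)
    if total_activity <= 0:
        raise ValueError("total activity must be positive")
    rate_per_unit = aggregate_expected_loss / total_activity
    return {f.name: rate_per_unit * f.activity for f in firms}


# Hypothetical portfolio: A and B differ only in precautions; C is twice as active.
firms = [
    Firm("A", activity=100, takes_precautions=True),
    Firm("B", activity=100, takes_precautions=False),
    Firm("C", activity=200, takes_precautions=False),
]
premiums = crude_premiums(firms, aggregate_expected_loss=4_000_000)
for name, premium in premiums.items():
    print(f"{name}: ${premium:,.0f}")
```

In this toy portfolio, A and B pay the same premium despite A's precautions, which is the lost incentive the paragraph above concedes. C pays twice as much because it is twice as active, which is the deterrent on activity levels that survives even crude pricing.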

Removing the Wedge Between Liability and Harm

For insurers covering third-party liability, sometimes lawyers are a safer investment than investments in safety.

We’ve occasionally seen this dark pattern in cyber insurance, where, in response to incidents, sometimes insurers provide lawyers who prevent outside forensics firms from sharing findings with policyholders to avoid creating evidence of negligence. This actively hampers institutional learning. The risk of liability may decrease, but the risk of harm increases.

The only real remedy is policy intervention, in the form of transparency requirements and clearer assignment of liability. Breach notification laws and disclosure rules are successful examples in cyber: With less room to bury damning incidents or poor security hygiene, insurers and policyholders have refocused their efforts on mitigating harms.

California’s recently passed Transparency in Frontier Artificial Intelligence Act is therefore a step in the right direction. The act creates whistleblower protections and requires major AI companies to report to the government what safeguards they have in place. Even skeptics of regulation by insurance, and proponents of federal preemption of state AI laws, recognize the value of such transparency requirements.

A predecessor bill that was vetoed last year would have taken this further by more clearly assigning liability to foundation model developers for certain catastrophic harms. The question of who to assign liability to has been discussed in Lawfare and elsewhere; at issue here is how it gets assigned. By removing the need to prove negligence, a no-fault liability regime for such catastrophes would eliminate legal ambiguity altogether, mirroring liability for other high-risk activities such as commercial nuclear power and ultra-hazardous chemical storage. This would focus insurer efforts on pricing technological risk and reducing harm, rather than pricing legal risk and shunting blame around.

Workers’ compensation laws from the 20th century were remarkably successful in this regard. The Industrial Revolution brought heavy machinery and, with it, a dramatic rise in worker injury and death. Once liability was clearly assigned to employers in the 1910s though, insurers’ inspectors and safety engineers got to work bending the curve: improvements in technology and safety practices produced a 50 percent reduction in injury rates between 1926 and 1945.

Catastrophic Risk: Greatest Challenge, Greatest Opportunity

Nowhere are the challenges and opportunities of this insurance market more stark than with catastrophic risks. Both experts and industry warn of frontier AI systems potentially enabling bioterrorism, causing financial meltdowns, or even escaping the control of their creators and wreaking havoc on computer systems. If even one of these risks is material, the potential losses are staggering. (For reference, the NotPetya cyberattack of 2017 cost roughly $10 billion globally; major IT disruptions such as the 2024 CrowdStrike outage cost some tens of billions globally; the coronavirus pandemic is estimated to have cost the U.S. alone roughly $16 trillion.)

Under business as usual, insurers face silent, unpriced exposure to these risks. Few are the voices sounding the alarm. We may therefore see a sudden market correction, similar to terrorism insurance post-9/11: After $32.5 billion in losses, insurers swiftly limited terrorism risk coverage or exited the market altogether. With coverage unavailable or prohibitively expensive, major construction projects and commercial aviation ground to a halt since bank loans often require carrying such insurance. The government was forced to stabilize the market, providing insurance or reinsurance at subsidized rates. It’s entirely possible an AI-related catastrophe could similarly freeze up economic activity if AI risks are suddenly excluded by insurers.

Silent coverage aside, insurers don’t have the risk appetite to write affirmative coverage for AI catastrophes. The likes of OpenAI and Anthropic already can’t purchase sufficient coverage, with insurers “balking” at their multibillion-dollar lawsuits for harms far smaller than those experts warn might come. Such supply-side failures leave both the AI industry and the broader economy vulnerable.

An enormous opportunity is also at stake here. Counterintuitively, it is precisely these low-probability, high-severity risks that insurers are well-suited to handle. Not because risk-pooling is very effective for such risks—it isn’t—but because, when insurers get serious skin in the game for such risks, they are powerfully motivated to invest in precisely the efforts markets are currently failing to invest in: forward-looking causal risk modeling, monitoring policyholders, and mandating robust safeguards. For catastrophic risks, these efforts are the only effective method for insurers to control the magnitude and volatility of losses.

Such efforts are on full display in commercial nuclear power. Insurers supplement public efforts with risk modeling, safety ratings, operator accreditation programs, and plant inspections. America’s nuclear fleet today stands as a remarkable achievement of engineering and management: Critical safety incidents have decreased by over an order of magnitude, while energy output per plant has increased, in no small part thanks to insurers.

Put another way, insurers are powerfully motivated to pick up the slack from poorly incentivized AI companies. The challenge of regulating frontier AI can be largely outsourced to the market, with the assurance that if risks turn out to be negligible, insurers will stop allocating so many resources to managing them.

Clearly delegating to insurers the task of pricing in catastrophic risk from AI also helps by simply directing their attention to the issue. My research finds that insurers price catastrophic risk quite effectively when they cover it knowingly, even when it involves great uncertainty. To reuse the example above, commercial nuclear insurance pricing was remarkably accurate at least as early as the 1970s, despite incredibly limited data. Insurers estimated the frequency of serious incidents at roughly 1-in-400 reactor years, which turned out to be within the right order of magnitude; the same can’t be said of the 1-in-20,000 reactor years estimate from the latest government report at the time.
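To see how far apart the two frequency estimates are, it helps to convert them into expected incident counts. The sketch below is a back-of-the-envelope illustration only: the 1,000 reactor-years of exposure is a hypothetical round number chosen for readability, not a figure from the historical record, and the function name `expected_incidents` is made up for this example.

```python
def expected_incidents(reactor_years: float, one_in_n_reactor_years: float) -> float:
    """Expected number of serious incidents for a given exposure and frequency."""
    if one_in_n_reactor_years <= 0:
        raise ValueError("frequency denominator must be positive")
    return reactor_years / one_in_n_reactor_years


HYPOTHETICAL_EXPOSURE = 1_000  # reactor-years, assumed purely for illustration

insurer_estimate = expected_incidents(HYPOTHETICAL_EXPOSURE, 400)
government_estimate = expected_incidents(HYPOTHETICAL_EXPOSURE, 20_000)
ratio = 20_000 / 400  # how many times higher the insurers' frequency estimate was

print(insurer_estimate, government_estimate, ratio)
```

Over the same assumed exposure, the insurers' figure implies 2.5 expected serious incidents, while the government figure implies 0.05, a fifty-fold gap.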

This suggests table-top exercises or scenario modeling—such as those mandated by the Terrorism Risk Insurance Program—are particularly high-leverage interventions. By simply surfacing threat vectors and raising the salience of catastrophe scenarios, these turn unknown unknowns into at least known unknowns, which insurers can work with.

Alerting insurers to catastrophic AI risk is not enough however. They will simply write new exclusions, and the supply of coverage will be lacking or unaffordable. In response, major AI companies will likely self-insure through pure captives—that is, subsidiary companies that insure their parent companies. Fortune 50 companies such as Google and Microsoft already do this. Smaller competitors would be left out in the cold, exposed to risk or paying exorbitant premiums.

Pure captives also sacrifice nearly all potential for private governance here: They do nothing to solve the industry’s various legitimate coordination problems, such as preventing an AI Three Mile Island; and they lack sufficient independence to be a real check on the industry.

Mutualize: An Old Solution for a New Industry

To recap: Under business as usual, coverage for catastrophic AI risk will be priced all wrong, and will face both supply and demand failures; yet this is precisely where the opportunity for private governance is greatest.

There is an elegant, tried-and-true solution to these problems: The industry could form a mutual, a nonprofit insurer owned by its policyholders. AI companies would be insuring each other, paying premiums based on their risk profiles and activity levels. Historically, it is mutuals that have the best track record of matching effective private governance with sustainable financial protection. They coordinate the industry on best practices, invest in public goods such as safety R&D, and protect the industry’s reputation through robust oversight, often leveraging peer pressure. Crucially, mutuals have sufficient independence from policyholders to pull this off: No single policyholder has a monopoly over the mutual’s board.

The government can encourage mutualization by simply giving its blessing, signaling that it won’t attack the initiative. In fact, the McCarran-Ferguson Act already shields insurers from much federal anti-trust law, though not overt boycott: The mutual cannot arbitrarily exclude AI companies from membership.

If mutualization fails and market failures persist, the government could take more aggressive measures. It could mandate carrying coverage for catastrophic risk, and more or less force insurers to offer coverage through a joint-underwriting company. Such companies are dedicated risk pools offering specialized coverage where it is otherwise unavailable. This intervention (or the threat of it) is the stick to the carrot of mutualization: Premiums would undoubtedly be higher and relationships more adversarial. Still, it would achieve policy goals. It would protect the AI industry from shocks, ensure victims are compensated, and develop effective private governance.

Whether a mutual or a joint-underwriting company, the idea is to create a dedicated, independent private body with both the leverage and incentives to robustly model, price, and mitigate covered risks. Even the skeptics of private governance by insurance agree that this works. Again, nuclear offers a successful precedent: Some of its risks are covered by a joint-underwriting company, American Nuclear Insurers; others, by a mutual, Nuclear Electric Insurance Limited. Both are critical to the overall regulatory regime.

Public Policy for Private Governance

Both skeptics and proponents of using insurance as a governance tool agree: It won’t function well without public policy nudges. This market needs steering. Light-touch interventions include transparency requirements, clearer assignment of liability, scenario modeling exercises, and facilitating information-sharing between stakeholders. Muscular interventions include insurance mandates and government backstops for excess losses.

Backstops, a form of state-backed insurance, make sense only for truly catastrophic risks. These are risks the government is always implicitly exposed to: It cannot credibly commit not to provide disaster relief or bailouts to critical sectors. Major AI developers may be counting on this. Instead of an ambiguous subsidy in the form of ad hoc relief, an explicit public-private partnership allows the government to extract something in return for playing insurer of last resort. Intervening on the insurance market has the benefit of avoiding picking winners or losers (in contrast to taking an equity stake in any particular AI firm).

A backstop also creates the confidence and buy-in the private sector needs to shoulder more risk than it otherwise would. This is precisely what the Price-Anderson Act did for nuclear energy, and the Terrorism Risk Insurance Act did for terrorism risk. Price-Anderson even generated (modest) revenue for the government through indemnification fees.

Major interventions require careful design of course. Poorly structured mandates could simply prop up insurance demand or create a larger moat for well-resourced firms. Ill-conceived backstops could simply subsidize risk-taking. On the other hand, business as usual carries its own risks. It leaves the economy vulnerable to shocks, potential victims without a guarantee they will be made whole, and private governance to wither on the vine or, worse, to perversely pursue legal over technological innovation.

The stakes are high then, and early actions by key actors—governments, insurers, underwriting start-ups, major AI companies—could profoundly shape how this nascent market develops. Nothing is prewritten: History is full of cautionary tales as well as success stories. Steering toward the good will require a mix of deft public policy, risk-taking, technological innovation, and good-faith cooperation.

xAI’s Trade Secrets Challenge and the Future of AI Transparency

xAI is challenging AB-2013, a California state law that took effect at the beginning of this year and requires xAI and other generative AI developers who provide services to Californians to publicly disclose certain high-level information about the data they use to train their AI models.

According to its drafters, the law aims to increase transparency in AI companies’ training data practices, helping consumers and the broader public identify and mitigate potential risks and identify appropriate use cases for AI. Supporters of this law view it as an important step toward a more informed public. Detractors view it as innovation-stifling. Other developers, including Anthropic and OpenAI, have already released their training data summaries in compliance with the new law.

xAI challenges AB-2013 on the grounds that the law would force it to disclose proprietary trade secrets, thereby destroying their economic value, in violation of the Fifth Amendment’s Takings Clause. It also claims that the law constitutes compelled speech in violation of the First Amendment and is unconstitutionally vague because it does not provide sufficient detail on how to comply. In this note, I focus on the trade secrets claim.

At the core of this dispute lies a tension between the values of commercial secrecy and transparency. In other industries and contexts, this tension is a familiar one: a company develops commercially valuable information – a recipe, a special sauce, or a novel way to produce goods efficiently – that it wishes, for good reason, to keep secret from competitors; at the same time, consumers of that company’s goods or services wish to know, for good reason, the nature and risks of what they are consuming. Sometimes, there is an overlap between the secrets a company wishes to keep and the information the public wishes to know. When that happens, the law plays an important role in resolving that tension one way or the other. How it does so can be as much a political question as a legal one.

This is what AB-2013 and xAI’s challenge to it are about. The AI industry is highly competitive, and companies have a legitimate interest in protecting any hard-won competitive edge that their secret methods provide. At the same time, the public has many unanswered questions about the nature of these services, which are increasingly embedded in their lives. There are weighty principles on either side. The outcome of this dispute could shape the legal treatment of these competing interests for years to come.

What does AB-2013 ask for?

Under section 3111(a) of AB-2013, developers must disclose a “high-level summary” of aspects of their training data, including:

- The sources or owners of the datasets.

- The purpose and methods of collecting, processing, and modifying datasets.

- Descriptions of their data points, including what kinds of data are being used and the scale of the datasets.

- Whether personal information or aggregate consumer information is being used for training.

- Whether datasets include third-party intellectual property, including copyrighted and patented materials.

- The date ranges of when the datasets were used.

The law took effect on January 1 of this year. It applies retroactively to datasets of models released on or after January 1, 2022.

There are a few bespoke exceptions to this bill for particular AI models, namely those used for security and integrity purposes, for the operation of aircraft, and for national security, military, or defense purposes. There are, however, no exceptions for information that constitutes a trade secret.

What is a trade secret?

The crux of xAI’s position is that complying with AB-2013 would force it to reveal its trade secrets.

Broadly, a trade secret is any information that a company has successfully kept secret from competitors, and that confers a competitive advantage because of its secrecy. In other words, it must both be a secret in fact and generate independent economic value as a result of that secrecy. Trade secrets receive protection under state and federal law, and since the US Supreme Court’s 1984 decision in Ruckelshaus v. Monsanto Co., they can constitute property protected by the Fifth Amendment’s Takings Clause.

While in principle the definition of a trade secret is broad enough to encompass virtually any information that meets its criteria, it is easier to claim trade secrecy for specific ‘nuts and bolts’ information, such as particular manufacturing instructions or the specific recipe for a food product. This is because revealing those details directly enables competitors to replicate them. Conversely, claims for general and abstract information are harder to establish because they tend to give less away about a company’s internal strategies. This is relevant to AB-2013, since it requires only a “high-level summary” of the disclosure categories.

Before applying this to xAI’s claim, it is important to note that regulations restricting the scope and protection of trade secrets are not necessarily unconstitutional. Constitutional doctrine balances trade secret protection against other interests, including the state’s inherent authority to regulate its marketplace by imposing conditions on companies that wish to participate in it. In some circumstances, disclosure of trade secrets may be one such condition.

Against this backdrop, xAI brings two trade secret challenges against AB-2013:

- First, it claims that AB-2013 constitutes a “per se” taking, meaning that the government is outright appropriating its property without compensation.

- Second, it claims that AB-2013 constitutes a regulatory taking, meaning that the government is imposing unjustified conditions on its property that substantially undermine its economic value to xAI.

xAI’s first claim: AB-2013 is a per se taking

xAI’s per se takings challenge is its most aggressive and atypical. Traditionally, this type of claim applies to government actions that would assume control or possession of tangible property, for example, to build a road through a person’s land. A per se taking can also occur when regulations would totally prevent an owner from using their property.

The court will need to consider first, whether AB-2013 targets xAI’s proprietary trade secrets and second, whether the law would appropriate or otherwise eviscerate xAI’s property interest in them. To my knowledge, no one has ever successfully argued a per se taking in the context of trade secrets, and there are good reasons to think xAI will not be the first.

(a) Does AB-2013 target xAI’s proprietary trade secrets?

xAI claims that through significant research and development, it has developed novel methods for using data to train its AI models, and that the secrecy of this information is paramount to its competitive advantage. It claims that its trade secret lies in the strategies and judgments xAI makes about which datasets to use and how to use them. To demonstrate the importance of secrecy, xAI cites various security protocols and confidentiality obligations it imposes internally to protect this information from getting out.

Given that the information in question remains undisclosed, it is difficult to assess the value and status of the information that xAI is required to disclose. We can reasonably assume that xAI does indeed possess some genuinely valuable secrets about how to effectively and efficiently approach training data. Yet it is much less clear whether any such secrets are implicated by the high-level summary required by AB-2013.

For example, suppose that xAI has developed a specific novel heuristic for curating and filtering datasets that allows it to achieve a particular capability more efficiently than publicly known methods. It could still disclose the more general fact that its datasets are curated and filtered, without jeopardizing the secrecy of that particular heuristic. Likewise, perhaps a specific method for allocating datasets between pre-training and post-training constitutes a trade secret. AB-2013 does not ask what the specific allocation method is. Indeed, if xAI’s disclosure were comparable in scope to those of OpenAI and Anthropic, it would be highly unusual for this degree of detail about a company to constitute a trade secret.

Yet this is precisely what xAI must demonstrate. To constitute a per se taking, it is not enough that disclosure provides clues about underlying secrets or even that it partially reveals them. xAI must show a more direct connection between the disclosure categories and its trade secrets.

(b) Does AB-2013 appropriate xAI’s proprietary trade secrets?

If the above analysis is correct, xAI will struggle at this second stage to show that disclosure would constitute a categorical appropriation or elimination of all economically beneficial value in the relevant property. If xAI lacks a discrete property interest in the disclosable information, it is hard to envision a court finding that AB-2013 would nevertheless indirectly appropriate some other property interest.

There are a few additional issues to mention. For one, unlike in a classic per se takings claim, here the claimed property would be extinguished by the law, rather than transferred to the control or possession of another entity. This is for the simple reason that, like ordinary secrets, a trade secret ceases to exist (ceases to be a secret) if it is publicly known. Since AB-2013 would destroy any trade secrecy in the disclosable information, the application of traditional takings analysis is a bit awkward.

Further, California can argue that AB-2013 is a conditional regulation: it requires disclosure only as a condition for developers operating in the California marketplace, and developers may choose whether to do so. This makes it seem less like an outright taking by the government and more like a quid pro quo that companies may choose to engage in voluntarily.

However, this argument is considerably weaker with respect to AB-2013’s retroactive application to services provided since 2022, as companies affected by that clause cannot now choose to opt out. This raises a further question: whether these regulations were foreseeable, or whether xAI had a reasonable expectation that they would not be introduced. That question is central to the second claim advanced by xAI, and I will analyze it below.

xAI’s second claim: AB-2013 is a regulatory taking

xAI’s second argument is more orthodox. xAI argues that, even if AB-2013 is not an outright appropriation of its trade secrets, it imposes regulations that so significantly interfere with them as to amount to a taking. This argument avoids some of the hurdles of the first: it does not require that AB-2013 completely eviscerate the claimed trade secret, and there are several precedents in which this argument has been successfully made.

To determine the constitutionality of AB-2013, the court will balance the following factors established in Penn Central:

(a) The economic damage that xAI would suffer by complying with the law;

(b) The character of the government action, including the public purpose that disclosure is intended to serve; and

(c) Whether xAI had a reasonable investment-backed expectation that it would not be required to disclose this information at the time that it developed it.

(a) Economic damage to xAI

As noted above, the present information asymmetry makes it difficult to assess the harm disclosure would cause to xAI, and there are reasons to be skeptical that a high-level summary would meaningfully disadvantage xAI. Nevertheless, let’s assume that compliance would indeed destroy something valuable to xAI. In that case, the state would need to justify this disadvantage to xAI on further grounds.

(b) The character of the government action

As noted, states have the authority to regulate their marketplaces. This gives them some scope to regulate trade secrets in the service of a legitimate public interest. The public interest in the disclosable information is therefore key to California’s defense of AB-2013.

While xAI emphasizes the disadvantages that disclosure would cause for its business, it discounts the public interest in this information. It questions why the public needs to know these details and argues that they would be largely unintelligible and uninteresting.

Despite what xAI suggests, there are reasons to be interested in the disclosable information, both for direct consumers and for researchers, journalists, and other third parties who could use it to enhance public understanding. For example:

- Whether a model is trained on proprietary, personal, or aggregate consumer information can help users understand the legal and ethical implications of using such models and enable them to make educated choices among their options.

- Understanding the sources, purposes, and types of training data may help identify the biases and limitations of particular models and appropriate use cases.

- The date ranges of datasets can help identify gaps in a model’s capabilities and areas in which its responses may rely on outdated data.

- Information about training data sources can be used to assess the risk of data poisoning attacks that could cause the model to behave unsafely in certain circumstances, posing risks to both consumers and third parties.

- Information about training data can be used to assess the risk that data contamination makes model evaluations (including evaluations on which consumers rely) less reliable.

Note that even if it is not known in advance precisely why certain metadata is relevant to consumers, this is not an argument for secrecy. Some risks will only be identified once the information is made public, as when an ingredient or chemical is identified as toxic after the fact. There may be highly consequential decisions in training data that are only understood later. Given the current opacity of generative AI, it is reasonable for the public to expect greater transparency. AB-2013 is, in this sense, a precautionary regulation.